The authors would like to acknowledge the invaluable assistance of the following team members in achieving the results discussed in this article: Bill Grosso, Pallas Horwitz, Jordan Nafa, Chase Ruyle, James Sprinkle, Taylor Steil, and John Szeder.

In game publishing, revenue optimization allows development teams, designers, and artists to make money from their creative work. The proceeds pay for ongoing work and fund future projects. A key challenge is that players’ preferences and behaviors continuously evolve, so marketing strategies must adapt to engage players and monetize game content effectively.

Multi-armed bandit (MAB) algorithms have emerged as a powerful tool in this quest [1, 2]. MABs optimize across a number of given variants, e.g., different ads, offers, content, or other elements of the player experience. They roll out the variant that maximizes a specified reward, e.g., views, click-throughs, or purchases. The more arms an MAB has, the more options it can consider for optimization. Besbes, Gur, and Zeevi [3] succinctly summarize the challenge for the bandit: to optimize effectively, it needs “to acquire information about arms (exploration) while simultaneously optimizing immediate rewards (exploitation); the price paid due to this trade-off is often referred to as the regret, and the main question is how small can this price be as a function of the horizon length T.”
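To make the notion of regret concrete: over T rounds, expected regret is the reward the best arm would have earned minus the reward the chosen arms are expected to earn. The following is a minimal sketch of that bookkeeping, not part of any cited method. The function name `cumulative_regret` and the click-through rates are hypothetical, and the true arm means are only known here because the example is simulated.

```python
def cumulative_regret(true_means, pulls):
    """Return the running expected regret after each pull.

    true_means: expected reward per arm (known here only because we simulate).
    pulls: arm indices chosen in rounds 1..T.
    """
    best = max(true_means)
    total, curve = 0.0, []
    for arm in pulls:
        total += best - true_means[arm]  # expected reward given up this round
        curve.append(total)
    return curve


# Hypothetical click-through rates for three ad variants.
ctr = [0.02, 0.03, 0.05]
T = 300
rotate = [t % 3 for t in range(T)]  # never learns: cycles through all arms
oracle = [2] * T                    # always plays the best arm

print(cumulative_regret(ctr, rotate)[-1])  # grows linearly with T
print(cumulative_regret(ctr, oracle)[-1])  # stays at zero
```

A policy that never learns accumulates regret in proportion to T, while a good bandit aims for regret that grows much more slowly than T.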

If the bandit also has access to contextual data describing different contexts within which it can optimize, it is called a contextual MAB. Then, the bandit can find the optimal variant conditional on this data, converging to an optimal personalized strategy [4]. Li et al. [4] describe this for the case of news article recommendation: the “algorithm sequentially selects articles to serve users based on contextual information about the users and articles, while simultaneously adapting its article-selection strategy based on user-click feedback to maximize total user clicks.”
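As a rough illustration of the contextual idea, the sketch below keeps one simple bandit per context value. It assumes the context is a single discrete player segment and uses Beta-Bernoulli Thompson Sampling within each segment. This is a deliberate simplification and not the algorithm of Li et al. [4]; the class name, segment labels, and click probabilities are all hypothetical.

```python
import random
from collections import defaultdict


class SegmentedThompsonSampler:
    """A separate Beta-Bernoulli Thompson sampler per discrete context."""

    def __init__(self, n_arms, seed=None):
        self.n_arms = n_arms
        self.rng = random.Random(seed)
        # Per segment: one [alpha, beta] pair per arm, starting at Beta(1, 1).
        self.params = defaultdict(lambda: [[1.0, 1.0] for _ in range(n_arms)])

    def choose(self, segment):
        draws = [self.rng.betavariate(a, b) for a, b in self.params[segment]]
        return max(range(self.n_arms), key=draws.__getitem__)

    def update(self, segment, arm, clicked):
        self.params[segment][arm][0 if clicked else 1] += 1.0

    def pulls(self, segment):
        # Observations per arm = alpha + beta minus the two prior pseudo-counts.
        return [a + b - 2.0 for a, b in self.params[segment]]


# Hypothetical click probabilities: each segment prefers a different variant.
true_ctr = {"new_player": [0.6, 0.1], "veteran": [0.1, 0.6]}
env = random.Random(0)
bandit = SegmentedThompsonSampler(n_arms=2, seed=1)
for _ in range(2000):
    segment = env.choice(list(true_ctr))
    arm = bandit.choose(segment)
    bandit.update(segment, arm, env.random() < true_ctr[segment][arm])

for segment in true_ctr:
    print(segment, bandit.pulls(segment))
```

Keeping separate statistics per segment is the simplest way to condition on context. With many or continuous context features, a model that shares information across contexts is usually needed instead.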

In this article, we explore the application of MABs across the 4Ps of the marketing mix in games: Product, Price, Promotion, and Place. We first address MAB applications to each of the 4Ps in the context of digitally published games, then discuss how bandits can help orchestrate activities “on the fly,” drawing on John White’s “Moving Worlds” concept [2] and a recent case study conducted by Game Data Pros.

Digital games today are often published under the free-to-play model. Throughout this article, we have free-to-play games in mind, even where we do not mention the model explicitly.

Bandit Algorithms in Game Product Optimization

The product is at the heart of the game experience, encompassing game mechanics, storytelling, difficulty levels, and progression systems. MABs can support product optimization by dynamically personalizing the gaming experience based on player interactions. For example, a key optimization problem in the product dimension of the game marketing mix is game difficulty, often personalized via so-called dynamic difficulty adaptation (DDA) systems [5].

Bandit algorithms can help steer such systems [6]. They can adjust game difficulty on the fly, ensuring that players are constantly challenged yet not frustrated. By analyzing player performance data, these algorithms can identify patterns and modulate the game’s difficulty to maintain an optimal balance between challenge and enjoyment. This approach can lead to higher player retention and increased revenue through sustained engagement [5].

Similarly, MAB algorithms can tailor other parts of a game, e.g., design elements, narratives, and character interactions, to align with individual player preferences [7].

Game Price and Promotion Optimization with Bandit Algorithms

Pricing and promotion strategies are critical components of a game’s revenue model, especially in free-to-play games using the in-app purchase model [8]. MAB algorithms offer a robust framework for optimizing these elements by continuously learning from player responses to various pricing and promotional offers [9].

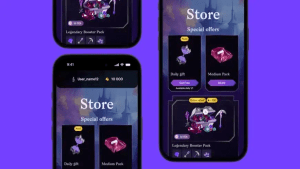

In the realm of in-game purchases, bandit algorithms can target and customize offers based on player behavior and purchasing patterns. For example, a player who frequently buys cosmetic items might be more responsive to exclusive, time-limited offers on new skins or outfits. MABs can dynamically adjust the frequency, type, and timing of promotional offers to maximize in-game purchase conversion rates and revenue. Additionally, MABs can optimize shop designs, ensuring that the most appealing and profitable items are prominently featured based on real-time player preferences.

Starter packs (or beginner bundles) are crucial elements of free-to-play game monetization. They provide a premium experience tailored to the early game and help players get off to a successful start. Game Data Pros just concluded a research collaboration with academics where we investigated the application of bandit methods for the targeting of such packs. The paper was published a week ago as part of the proceedings of the 17th IEEE Conference on Games [10]. Give it a read!

Optimizing Place in the Game Marketing Mix with Bandit Algorithms

The concept of “place” in the marketing mix refers to the distribution channels and touchpoints through which players interact with and learn about (new) game content. MAB algorithms can optimize across these touchpoints to enhance the overall player experience and drive revenue growth. For example, advertising and cross-promotion are key areas in which MABs can excel. By analyzing player engagement data, these algorithms can determine the most effective ad creatives, formats, and placements [11]. This ensures that players are exposed to ads that are not only relevant but also likely to generate higher click-through rates and conversions.

One key application in this area is the timing and frequency of in-game pop-ups, ads, and informational content. Bandit algorithms can analyze player interaction data to determine the optimal moments to present these elements. By doing so, they ensure that players receive relevant and timely content without feeling overwhelmed or interrupted, thereby enhancing engagement and reducing churn.

Moreover, contextual bandit algorithms can optimize the distribution of story elements, ads, and game content across different player segments [4]. By identifying the most effective touchpoints for each segment, these algorithms ensure that players receive content that resonates with their preferences and enhances their overall gaming experience.

“Moving Worlds” and Orchestration in Multidimensional Optimization

The coordination of different marketing activities is crucial to achieving a cohesive strategy. Effective orchestration ensures that the various dimensions—Product, Price, Promotion, and Place—work harmoniously to achieve overarching marketing goals. For instance, aligning user acquisition campaigns with in-game monetization strategies can lead to more efficient spending and higher returns on investment [12].

This orchestration is a multidimensional optimization problem whose full structure is not known in advance. In practice, the hope is that many smaller, separate optimizations will together approximate an overall optimal solution. Across these optimizations, interactions and relevant factors often emerge only at runtime and are unknown ex ante.

John White calls this phenomenon “Moving Worlds” [2] and notes that “the value of different arms in a bandit problem can easily change over time” (p. 63). MABs afford us an important advantage over more static methods here: if appropriately configured, they can learn about emergent external factors and adapt the optimization strategy accordingly.
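One common way to configure a bandit for changing arm values is to let old evidence fade, so that recent behavior dominates the estimates. The sketch below shows this idea with an exponentially discounted variant of Thompson Sampling. It is an illustrative assumption, not a description of how any system mentioned in this article was configured. The function name `run_discounted_ts`, the discount `gamma`, and the click probabilities are hypothetical.

```python
import random


def run_discounted_ts(ctr_schedule, gamma, seed=0):
    """Thompson Sampling with exponential forgetting.

    ctr_schedule: list of per-round lists of true click probabilities.
    gamma: discount in (0, 1]; gamma = 1.0 recovers standard Thompson Sampling.
    Returns the arm chosen in each round.
    """
    rng = random.Random(seed)
    n_arms = len(ctr_schedule[0])
    wins = [0.0] * n_arms    # discounted click counts
    losses = [0.0] * n_arms  # discounted no-click counts
    chosen = []
    for ctr in ctr_schedule:
        draws = [rng.betavariate(1 + a, 1 + b) for a, b in zip(wins, losses)]
        arm = max(range(n_arms), key=draws.__getitem__)
        clicked = rng.random() < ctr[arm]
        # Old evidence fades for every arm, then the new observation is added.
        wins = [gamma * a for a in wins]
        losses = [gamma * b for b in losses]
        if clicked:
            wins[arm] += 1.0
        else:
            losses[arm] += 1.0
        chosen.append(arm)
    return chosen


# Hypothetical "moving world": the better of two creatives flips halfway.
schedule = [[0.6, 0.2]] * 2000 + [[0.2, 0.6]] * 2000
for gamma in (1.0, 0.99):
    tail = run_discounted_ts(schedule, gamma)[-1000:]
    print(gamma, sum(arm == 1 for arm in tail) / len(tail))
```

The printed value is the share of the last 1,000 rounds spent on the arm that became better after the flip. A smaller `gamma` reacts faster to change but keeps exploring more, so the discount is itself a tuning decision.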

We just ran a large-scale MAB for a cross-promotion campaign that highlighted this advantage. Let’s dive in and take a closer look at how this worked.

Case Study: How a Bandit Adapted to a “Moving World”

To illustrate a practical application, let’s delve into a recent case study in which Game Data Pros utilized an MAB algorithm to optimize ad creatives for the cross-promotion of a new major game title. This case study demonstrates how bandit algorithms can adapt to a “Moving World” and achieve effective orchestration of emergent interaction effects between different marketing initiatives.

Working with a large game publisher, we faced the challenge of promoting a new major game title to the existing player base. The goal was to identify the most effective ad creatives for cross-promotion, maximizing player engagement and conversion rates. We used an MAB algorithm to optimize across different ad creatives, focusing on the color scheme and the in-game character used to advertise the new game.

As the bandit algorithm went live, it initially identified a creative variant that resonated well with the target audience. However, during the campaign, other marketing activities, such as social media promotions and influencer partnerships, impacted and shifted player preferences. These activities highlighted specific features of the new game title, making players more receptive to ad creatives that aligned with this new messaging.

Recognizing this shift, the MAB adapted quickly, reallocating exposure to the creatives that reflected the updated messaging. This dynamic adaptation ensured that the cross-promotion campaign rolled out a creative variant with 30% higher click-through than the worst-performing variant. Under a naïve strategy, e.g., mixing across ad variants with equal probability, player engagement with the ads (as measured by players clicking the ad) would have been substantially lower.

This case study underscores the importance of using MABs in a “Moving World.” The ability to adapt to changing player preferences and align with other marketing activities is crucial for maximizing the effectiveness of cross-promotion campaigns. By leveraging MAB algorithms, game developers can ensure that their marketing strategies remain agile and responsive, driving sustained revenue growth.

Method: How Did the Bandit Do It?

In any decision-making process, we face a dilemma between two strategies: exploration, in which we learn as much as possible about the available options, and exploitation, in which we choose the best option based on our current knowledge of the available options [3]. These strategies are naturally opposed. By exploring possible options, we are potentially missing out on rewards that could be exploited now. By exploiting our current knowledge of the best outcome, we are potentially missing out on future rewards that could be discovered through exploration. The goal of any bandit algorithm is to strike a balance between exploration and exploitation such that we maximize the total reward (or, equivalently, minimize the total regret [3]).

In this case study, we used four ads (two in-game characters, each on either a light or a dark background) and a simple MAB algorithm called Thompson Sampling [13] to balance exploration and exploitation and optimize the click-through rate. In short, Thompson Sampling balances the exploration/exploitation tradeoff by showing each ad in proportion to the current probability that it has the best click-through rate. For example, if the bandit currently estimates a 75% probability that an ad has the best click-through rate, it will show that ad to about 75% of players, while the remaining 25% of traffic is used to explore the other ads.
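The probability-matching behavior described above can be sketched in a few lines. The example assumes clicks are Bernoulli outcomes with a uniform Beta(1, 1) prior per ad, a standard textbook setup that is not necessarily the exact configuration used in the case study. The click and view counts are hypothetical.

```python
import random


def thompson_choose(clicks, views, rng):
    """Draw one plausible CTR per ad from its Beta posterior; show the top ad."""
    draws = [rng.betavariate(1 + c, 1 + v - c) for c, v in zip(clicks, views)]
    return max(range(len(draws)), key=draws.__getitem__)


def prob_best(clicks, views, rng, n_draws=10_000):
    """Monte Carlo estimate of each ad's probability of having the best CTR."""
    wins = [0] * len(clicks)
    for _ in range(n_draws):
        wins[thompson_choose(clicks, views, rng)] += 1
    return [w / n_draws for w in wins]


# Hypothetical interim data for four ad creatives.
clicks = [30, 45, 20, 25]
views = [1000, 1000, 1000, 1000]
shares = prob_best(clicks, views, random.Random(42))
print(shares)  # the expected traffic split under Thompson Sampling
```

Because each player is served the ad that wins a single posterior draw, the long-run traffic share of an ad equals its estimated probability of being the best one. No separate exploration rate needs to be set.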

Initially, all ads are shown to players in equal proportion: 25% of traffic for each (see Figure 4). As players are shown the ad variants, the bandit updates the traffic distribution based on observed click-through behavior. As more data is collected, the bandit detects differences in click-through rates between different ads. Eventually, the algorithm converges and directs nearly all incoming traffic to the best-performing ad.
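The update loop just described can be simulated as follows. This is a sketch under stated assumptions: stationary click-through rates, a Beta(1, 1) prior, and hypothetical CTR values that are exaggerated so the run converges within a few thousand rounds. The function name `simulate` and the batch size are illustrative.

```python
import random


def simulate(true_ctr, n_rounds, batch, seed=0):
    """Run Beta-Bernoulli Thompson Sampling; return traffic shares per batch."""
    rng = random.Random(seed)
    k = len(true_ctr)
    clicks, misses = [0] * k, [0] * k
    shares, counts = [], [0] * k
    for t in range(1, n_rounds + 1):
        draws = [rng.betavariate(1 + clicks[i], 1 + misses[i]) for i in range(k)]
        arm = max(range(k), key=draws.__getitem__)
        if rng.random() < true_ctr[arm]:
            clicks[arm] += 1
        else:
            misses[arm] += 1
        counts[arm] += 1
        if t % batch == 0:  # close the batch and record its traffic split
            shares.append([c / batch for c in counts])
            counts = [0] * k
    return shares


# Hypothetical CTRs for four ads, exaggerated so the run converges quickly.
history = simulate([0.05, 0.06, 0.12, 0.20], n_rounds=10_000, batch=1_000)
print(history[0])   # first batch, which includes the initial exploration
print(history[-1])  # last batch
```

Before any data arrives, every ad has the same chance of winning a draw, which is the equal 25% split. The per-batch shares then drift toward the ad with the highest observed click-through rate.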

In this case study, the light ad variants had a lower click-through rate than the dark variants and were, thus, discarded quickly. Character B, on a dark background, was ahead initially; then Character A, fueled by other marketing activities, took over and drove home the win. In this way, the MAB automatically aligned with the other marketing activities and shifted which ad variant received the most traffic.

Conclusion

MAB algorithms offer a powerful solution for optimizing revenue across the 4Ps of the free-to-play game marketing mix. By dynamically personalizing product experiences, optimizing pricing and promotions, and enhancing the effectiveness of distribution touchpoints, these algorithms can drive sustainable engagement and revenue growth. Moreover, the importance of orchestration cannot be overstated. The implicit capability of MABs to adapt to emergent interactions and a “Moving World” [2] makes them a must-have tool in free-to-play engagement and revenue optimization.

References

[1] Rothschild, Michael. “A two-armed bandit theory of market pricing.” Journal of Economic Theory 9, no. 2 (1974): 185-202.

[2] White, John. “Bandit algorithms for website optimization.” O’Reilly Media, Inc., 2013.

[3] Besbes, Omar, Yonatan Gur, and Assaf Zeevi. “Stochastic multi-armed-bandit problem with non-stationary rewards.” Advances in Neural Information Processing Systems 27 (2014).

[4] Li, Lihong, Wei Chu, John Langford, and Robert E. Schapire. “A contextual-bandit approach to personalized news article recommendation.” In Proceedings of the 19th International Conference on World Wide Web, pp. 661-670. 2010.

[5] Ascarza, Eva, Oded Netzer, and Julian Runge. “Personalized Game Design for Improved User Retention and Monetization in Freemium Mobile Games.” Available at SSRN 4653319 (2023).

[6] Missura, Olana. “Dynamic difficulty adjustment.” PhD diss., Universitäts- und Landesbibliothek Bonn, 2015.

[7] Amiri, Zahra, and Yoones A. Sekhavat. “Intelligent adjustment of game properties at run time using multi-armed bandits.” The Computer Games Journal 8, no. 3 (2019): 143-156.

[8] Waikar, S. “Why Free-to-Play Apps Can Ignore the Old Rules About Cutting Prices.” Stanford Business Insights, 2022.

[9] Misra, Kanishka, Eric M. Schwartz, and Jacob Abernethy. “Dynamic online pricing with incomplete information using multiarmed bandit experiments.” Marketing Science 38, no. 2 (2019): 226-252.

[10] Runge, Julian, Anders Drachen, and William Grosso. “Exploratory Bandit Experiments with ‘Starter Packs’ in a Free-to-Play Mobile Game.” Proceedings of the 17th IEEE Conference on Games (CoG), 2024.

[11] Schwartz, Eric M., Eric T. Bradlow, and Peter S. Fader. “Customer acquisition via display advertising using multi-armed bandit experiments.” Marketing Science 36, no. 4 (2017): 500-522.

[12] Grosso, William. “The Origins of Revenue Optimization.” Game Data Pros Blog, 2024.

[13] Russo, Daniel J., Benjamin Van Roy, Abbas Kazerouni, Ian Osband, and Zheng Wen. “A tutorial on thompson sampling.” Foundations and Trends® in Machine Learning 11, no. 1 (2018): 1-96.